I hope this is fixed soon. In the meantime, here is the workaround that is working for me with kernel version 6.1.0-18-amd64, which is the current kernel in Debian 12 Bookworm.

Remove the Latest Kernel Since NVIDIA Broke on It

Once this is fixed, I will remove this section. For now, this is how I got the latest Debian 12 to load the NVIDIA driver and work with Docker.

sudo apt purge 'nvidia*'

sudo apt remove linux-image-6.1.0-18-amd64 linux-headers-6.1.0-18-amd64

sudo apt install -y linux-image-6.1.0-17-amd64 linux-headers-6.1.0-17-amd64

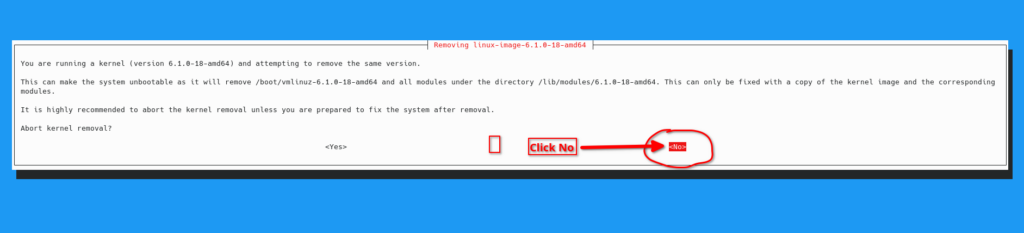

sudo apt-mark hold linux-image-6.1.0-17-amd64 linux-headers-6.1.0-17-amd64Also this will appear

You have to click No to continue removal of kernel. And yes this is a big deal!!! I hope you are doing this on a new VM and have a snapshot of it prior to doing this!!! You have been warned!!!

Reboot After Hold Is Placed

Reboot after the hold is placed, not before: most of these images want to upgrade to the latest kernel, so rebooting earlier may revert your changes. Placing a hold on the kernel should keep it at 6.1.0-17 until the stupid NVIDIA bug is fixed. Afterwards, the hold can be removed with apt-mark unhold. Note: I will update this once the issue is addressed.
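Once you do reboot (after the nouveau step below), it is worth confirming that the machine actually came back up on the pinned kernel and that its headers are present, since DKMS needs them to build the NVIDIA module. Here is a minimal sketch; `check_pinned_kernel` is a hypothetical helper name, and it assumes installed headers show up as the usual `/lib/modules/<release>/build` link:

```shell
#!/bin/sh
# Sketch: confirm we booted the pinned kernel and that its headers are installed.
# check_pinned_kernel is a hypothetical helper, not part of any Debian package.
#   $1 = expected kernel release (e.g. 6.1.0-17-amd64)
#   $2 = running release (defaults to `uname -r`)
#   $3 = modules root (defaults to /lib/modules)
check_pinned_kernel() {
    expected="$1"
    running="${2:-$(uname -r)}"
    modroot="${3:-/lib/modules}"

    if [ "$running" != "$expected" ]; then
        echo "WARNING: running $running, expected $expected" >&2
        return 1
    fi
    # Assumption: installed headers appear as /lib/modules/<release>/build.
    if [ ! -e "$modroot/$running/build" ]; then
        echo "WARNING: no headers found for $running" >&2
        return 2
    fi
    echo "OK: running $running with headers installed"
}
```

Usage: `check_pinned_kernel 6.1.0-17-amd64`, and `apt-mark showhold` lists the packages that are currently held.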

Blacklist Nouveau

Also, before rebooting, this is a good time to blacklist the nouveau driver, which may get in the way of installing the NVIDIA driver.

Run the following to keep nouveau from being loaded:

cat << 'EOF' | sudo tee /etc/modprobe.d/blacklist-nouveau.conf
blacklist nouveau
options nouveau modeset=0
EOF
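Before regenerating the initramfs, a quick check that the file really contains both lines can save a reboot. A sketch; `check_nouveau_blacklist` is a hypothetical helper that simply greps the file written above:

```shell
#!/bin/sh
# Sketch: verify the nouveau blacklist file written by the heredoc above.
# check_nouveau_blacklist is a hypothetical helper.
#   $1 = config file (defaults to /etc/modprobe.d/blacklist-nouveau.conf)
check_nouveau_blacklist() {
    conf="${1:-/etc/modprobe.d/blacklist-nouveau.conf}"
    # -x matches whole lines only, so a commented-out line does not count.
    if grep -qx 'blacklist nouveau' "$conf" 2>/dev/null &&
       grep -qx 'options nouveau modeset=0' "$conf" 2>/dev/null; then
        echo "OK: nouveau is blacklisted in $conf"
    else
        echo "MISSING: check $conf" >&2
        return 1
    fi
}
```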

sudo update-initramfs -u

Determine Needed Drivers

NVIDIA has a few driver series; see the NvidiaGraphicsDrivers page on the Debian wiki for more info. To find the recommended driver for your card, install nvidia-detect.

First, add the needed repositories with apt-add-repository. You can also do this manually; I did it this way for brevity. It is up to you how you add the non-default components required to install NVIDIA.

sudo apt install -y software-properties-common

sudo apt-add-repository contrib non-free non-free-firmware

Apparently, there is a bug in apt-add-repository, so I had to do it the hard way!
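The hard way means editing the deb822 sources file by hand. If you would rather make that change non-interactively, here is a sketch using sed; it assumes every `Components:` line reads exactly `Components: main`, as in the Before listing below. `add_nonfree_components` is a hypothetical helper, and `-i.bak` keeps a copy of the original file next to it:

```shell
#!/bin/sh
# Sketch: append contrib, non-free and non-free-firmware to a deb822 .sources file.
# add_nonfree_components is a hypothetical helper.
#   $1 = sources file (defaults to the Debian 12 cloud-image file)
add_nonfree_components() {
    file="${1:-/etc/apt/sources.list.d/debian.sources}"
    # Only lines that are exactly "Components: main" are changed, so running
    # this twice does not add the components a second time.
    # -i.bak edits in place and saves the previous contents as "$file.bak".
    sed -i.bak 's/^Components: main$/Components: main contrib non-free non-free-firmware/' "$file"
}
```

Because /etc/apt is root-owned, define and call it from a root shell (for example after `sudo -i`), then run `apt update`.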

sudo nano /etc/apt/sources.list.d/debian.sources

Before

Types: deb deb-src
URIs: mirror+file:///etc/apt/mirrors/debian.list
Suites: bookworm bookworm-updates bookworm-backports
Components: main

Types: deb deb-src
URIs: mirror+file:///etc/apt/mirrors/debian-security.list
Suites: bookworm-security
Components: main

After

Types: deb deb-src
URIs: mirror+file:///etc/apt/mirrors/debian.list
Suites: bookworm bookworm-updates bookworm-backports
Components: main contrib non-free non-free-firmware

Types: deb deb-src
URIs: mirror+file:///etc/apt/mirrors/debian-security.list
Suites: bookworm-security
Components: main contrib non-free non-free-firmware

Now we can run nvidia-detect:

sudo apt update

sudo apt -y install nvidia-detect

nvidia-detect

It will output something like this:

Detected NVIDIA GPUs:
01:00.0 3D controller [0302]: NVIDIA Corporation GK210GL [Tesla K80] [10de:102d] (rev a1)

Checking card: NVIDIA Corporation GK210GL [Tesla K80] (rev a1)
Your card is supported by the Tesla 470 drivers series.
It is recommended to install the
    nvidia-tesla-470-driver
package.
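If you are scripting this, the recommended package can be pulled out of that output. A sketch, assuming nvidia-detect keeps the wording shown above (the package name on the first non-empty line after "It is recommended to install the"); `recommended_nvidia_pkg` is a hypothetical helper:

```shell
#!/bin/sh
# Sketch: print the package name nvidia-detect recommends (reads its output on stdin).
# recommended_nvidia_pkg is a hypothetical helper; it relies on the message format above.
recommended_nvidia_pkg() {
    awk '/It is recommended to install the/ { found = 1; next }
         found && NF { print $1; exit }'
}
```

Usage: `pkg=$(nvidia-detect | recommended_nvidia_pkg)`, then `[ -n "$pkg" ] && sudo apt install -y firmware-misc-nonfree "$pkg"`. If nothing is printed, the wording did not match and you should read the nvidia-detect output yourself.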

Now Install Drivers

Add Needed Repositories

First, it is important to install the packages needed to make this easy:

sudo apt install -y build-essential gcc software-properties-common apt-transport-https dkms curl

I am using a Tesla K80 graphics card, so on Debian 12 I installed:

sudo apt install -y firmware-misc-nonfree nvidia-tesla-470-driver

Most people will install this one instead:

sudo apt install -y firmware-misc-nonfree nvidia-driver

Now that it is done, we can test whether it is working on the VM before using Docker.

Test NVIDIA

Oh, before doing this, it is recommended to restart. Often the driver is not loaded yet because another driver is in the way, and a restart clears all of that out. Then run nvidia-smi.
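To see whether nouveau is really out of the way and the nvidia module is loaded, you can check /proc/modules, the same data lsmod shows. A sketch; `module_loaded` is a hypothetical helper, and its optional second argument only exists so it can be tried against a saved copy of the file:

```shell
#!/bin/sh
# Sketch: report whether a kernel module is currently loaded.
# module_loaded is a hypothetical helper.
#   $1 = module name, $2 = modules list (defaults to /proc/modules)
module_loaded() {
    # Each line of /proc/modules starts with the module name followed by a space.
    grep -q "^$1 " "${2:-/proc/modules}" 2>/dev/null
}

for mod in nouveau nvidia; do
    if module_loaded "$mod"; then
        echo "$mod: loaded"
    else
        echo "$mod: not loaded"
    fi
done
```

On a working setup you would expect `nouveau: not loaded` and `nvidia: loaded`.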

nvidia-smi

It will output something like this:

debian@cuda:~$ nvidia-smi
Mon Feb 12 08:39:42 2024
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 470.223.02   Driver Version: 470.223.02   CUDA Version: 11.4     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  Tesla K80           On   | 00000000:01:00.0 Off |                    0 |
| N/A   65C    P0    58W / 149W |      0MiB / 11441MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
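For scripts, nvidia-smi can also print just selected fields with `--query-gpu` and `--format=csv,noheader`. Here is a sketch that pulls the driver branch (the part before the first dot) out of that CSV, so you can confirm the 470 series is the one in use; `driver_branch` is a hypothetical helper:

```shell
#!/bin/sh
# Sketch: print the driver branch for each GPU from nvidia-smi CSV output on stdin.
# driver_branch is a hypothetical helper. Expected input lines look like:
#   Tesla K80, 470.223.02
driver_branch() {
    awk -F', ' 'NF >= 2 { split($2, v, "."); print v[1] }'
}
```

Usage: `nvidia-smi --query-gpu=name,driver_version --format=csv,noheader | driver_branch`. For the card above, this should print 470.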

Time to Install Docker and Test

I am not going to show how to install Docker here, since I already have a separate write-up on doing a Docker install. Look at that, and come back here if you want to test CUDA in Docker.

Install Needed Docker Tools

curl -s -L https://nvidia.github.io/nvidia-docker/gpgkey | sudo tee /etc/apt/keyrings/nvidia-docker.key

curl -s -L https://nvidia.github.io/nvidia-docker/debian11/nvidia-docker.list | sudo tee /etc/apt/sources.list.d/nvidia-docker.list

sudo sed -i -e "s/^deb/deb \[signed-by=\/etc\/apt\/keyrings\/nvidia-docker.key\]/g" /etc/apt/sources.list.d/nvidia-docker.list
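Note that the sed above rewrites every line starting with `deb`, so running it a second time adds a second `[signed-by=...]` option and breaks the file. If you might re-run this step, a guarded variant is sketched below; `sign_nvidia_list` is a hypothetical helper, and it assumes the list's entries start with `deb https://`:

```shell
#!/bin/sh
# Sketch: add signed-by to apt list entries, skipping lines that already have options.
# sign_nvidia_list is a hypothetical helper.
#   $1 = list file, $2 = keyring path
sign_nvidia_list() {
    list="${1:-/etc/apt/sources.list.d/nvidia-docker.list}"
    key="${2:-/etc/apt/keyrings/nvidia-docker.key}"
    # Only "deb https://" lines match; lines already reading "deb [..." are left alone,
    # so running this more than once is safe.
    sed -i "s|^deb https://|deb [signed-by=$key] https://|" "$list"
}
```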

sudo apt update

sudo apt -y install nvidia-container-toolkit

sudo systemctl restart docker

Perform Docker Test

Run the following to test CUDA in Docker:

docker run --gpus all nvidia/cuda:12.1.1-runtime-ubuntu22.04 nvidia-smi

It should output something like this:

==========
== CUDA ==
==========

CUDA Version 12.1.1

Container image Copyright (c) 2016-2023, NVIDIA CORPORATION & AFFILIATES. All rights reserved.

This container image and its contents are governed by the NVIDIA Deep Learning Container License.
By pulling and using the container, you accept the terms and conditions of this license:
https://developer.nvidia.com/ngc/nvidia-deep-learning-container-license

A copy of this license is made available in this container at /NGC-DL-CONTAINER-LICENSE for your convenience.

Mon Feb 12 10:37:01 2024
+-----------------------------------------------------------------------------+
| NVIDIA-SMI 470.223.02   Driver Version: 470.223.02   CUDA Version: 12.1     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  Tesla K80           On   | 00000000:01:00.0 Off |                    0 |
| N/A   41C    P8    29W / 149W |      0MiB / 11441MiB |      0%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|  No running processes found                                                 |
+-----------------------------------------------------------------------------+
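The "CUDA Version" in the nvidia-smi header reads 11.4 on the host above but 12.1 inside this container. If you want to log that value from either place, here is a sketch that extracts it from nvidia-smi's default output; `smi_cuda_version` is a hypothetical helper that relies on the header layout shown above:

```shell
#!/bin/sh
# Sketch: print the "CUDA Version" field from nvidia-smi's default output on stdin.
# smi_cuda_version is a hypothetical helper that depends on the header format above.
# The container banner line "CUDA Version 12.1.1" has no colon, so it is skipped.
smi_cuda_version() {
    sed -n 's/.*CUDA Version: *\([0-9][0-9.]*\).*/\1/p' | head -n 1
}
```

Usage: `nvidia-smi | smi_cuda_version` on the host, and `docker run --rm --gpus all nvidia/cuda:12.1.1-runtime-ubuntu22.04 nvidia-smi | smi_cuda_version` for the container.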

Well, that is it. Now come the uses, such as AI. That is the whole reason I did this: so I could build AI and test some ideas out.

Errors

Here are some of the errors I am getting. I will update this once the error is fixed and it is no longer necessary to roll back the kernel and headers to load the NVIDIA drivers.

dpkg: error processing package nvidia-tesla-470-driver (--configure):
 dependency problems - leaving unconfigured
Processing triggers for libgdk-pixbuf-2.0-0:amd64 (2.42.10+dfsg-1+b1) ...
Processing triggers for libc-bin (2.36-9+deb12u4) ...
Processing triggers for initramfs-tools (0.142) ...
update-initramfs: Generating /boot/initrd.img-6.1.0-18-amd64
Processing triggers for update-glx (1.2.2) ...
Processing triggers for glx-alternative-nvidia (1.2.2) ...
update-alternatives: using /usr/lib/nvidia to provide /usr/lib/glx (glx) in auto mode
Processing triggers for glx-alternative-mesa (1.2.2) ...
Processing triggers for libc-bin (2.36-9+deb12u4) ...
Processing triggers for initramfs-tools (0.142) ...
update-initramfs: Generating /boot/initrd.img-6.1.0-18-amd64
Errors were encountered while processing:
 nvidia-tesla-470-kernel-dkms
 nvidia-tesla-470-driver
E: Sub-process /usr/bin/dpkg returned an error code (1)

Typical errors seen with the current Debian 12 kernel, 6.1.0-18-amd64, in the DKMS build log:

/var/lib/dkms/nvidia-tesla-470/470.223.02/build/make.log

ERROR: modpost: GPL-incompatible module nvidia.ko uses GPL-only symbol '__rcu_read_lock'
ERROR: modpost: GPL-incompatible module nvidia.ko uses GPL-only symbol '__rcu_read_unlock'
make[3]: *** [/usr/src/linux-headers-6.1.0-18-common/scripts/Makefile.modpost:126: /var/lib/dkms/nvidia-tesla-470/470.223.02/build/Module.symvers] Error 1
make[2]: *** [/usr/src/linux-headers-6.1.0-18-common/Makefile:1991: modpost] Error 2
make[2]: Leaving directory '/usr/src/linux-headers-6.1.0-18-amd64'
make[1]: *** [Makefile:250: __sub-make] Error 2
make[1]: Leaving directory '/usr/src/linux-headers-6.1.0-18-common'
make: *** [Makefile:80: modules] Error 2
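To check whether a failed DKMS build hit this particular problem, you can pull the offending symbol names out of make.log. A sketch; `gpl_only_symbols` is a hypothetical helper, and the path in the usage line assumes the DKMS layout shown above (module name and version will differ for other drivers):

```shell
#!/bin/sh
# Sketch: list GPL-only symbols that modpost rejected, from a DKMS make.log.
# gpl_only_symbols is a hypothetical helper.
#   $1 = path to make.log
gpl_only_symbols() {
    sed -n "s/.*uses GPL-only symbol '\([^']*\)'.*/\1/p" "$1" | sort -u
}
```

Usage: `gpl_only_symbols /var/lib/dkms/nvidia-tesla-470/470.223.02/build/make.log`. For the log above, it should list `__rcu_read_lock` and `__rcu_read_unlock`.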